Artificial Intelligence Acceptable Use Policy

1. Purpose

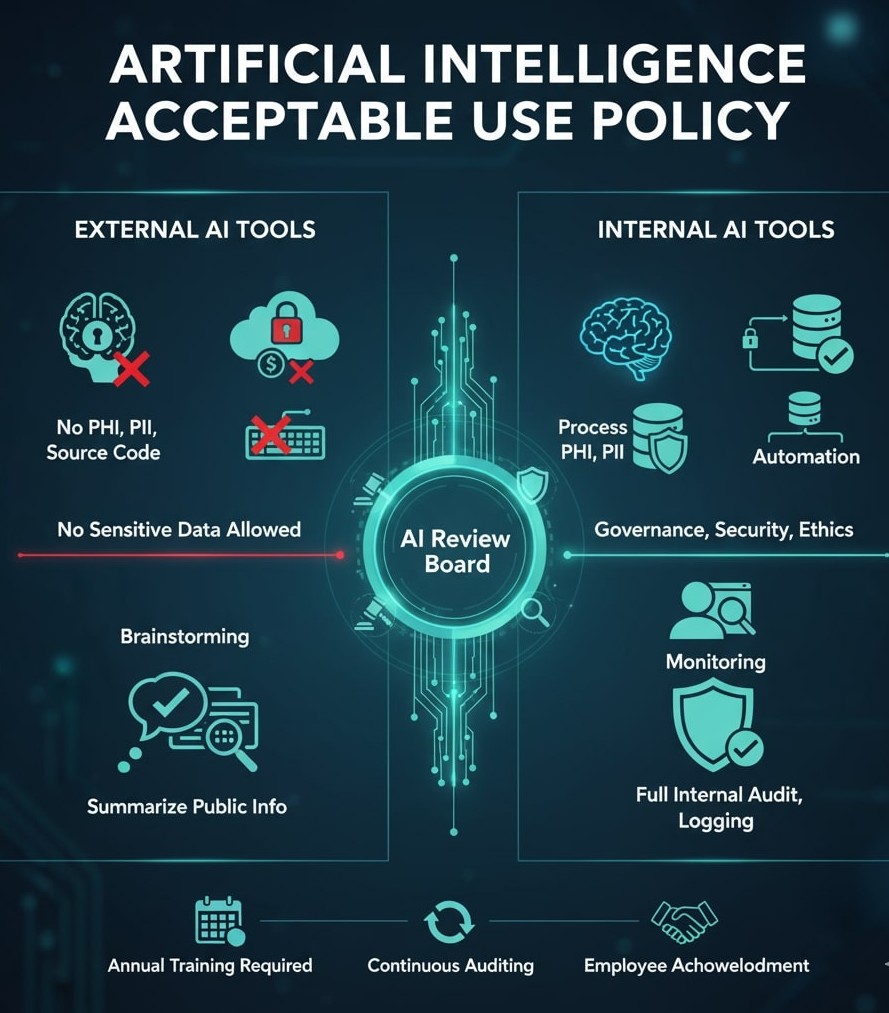

This policy establishes guidelines for the ethical, responsible, and secure use of Artificial Intelligence (AI) technologies within the company. It applies to both External AI Tools (hosted by third-party vendors) and Internal AI Tools (deployed on company infrastructure, behind a Virtual Private Network (VPN), and not accessible from outside).

The objectives are to:

- Protect sensitive company, client, and employee data.

- Ensure compliance with laws and regulations.

- Promote fairness, transparency, and trust in AI.

- Safeguard company Intellectual Property (IP).

- Provide accountability, explainability, and appeal mechanisms where AI influences decisions.

2. Scope

This policy applies to:

- All employees, contractors, consultants, and partners.

- All company-owned devices, accounts, and networks.

- All use of approved AI tools (both External and Internal).

3. Definitions

- External AI Tools: Third-party, cloud-hosted AI platforms (e.g., ChatGPT, Microsoft Copilot, Google Gemini, Claude, Apple Intelligence).

- Internal AI Tools: AI models and systems hosted on company servers or private cloud infrastructure, accessible only via VPN or secured networks.

- Protected Health Information (PHI): Medical information protected under HIPAA.

- Personally Identifiable Information (PII): Personal data defined under federal and state privacy laws.

- Proprietary Data: Confidential business data including client information, financial records, trade secrets, and internal strategies.

- Intellectual Property (IP): Creations of the mind (designs, inventions, brand assets) owned by the company.

- AI Incident: Any event where an AI system causes or risks causing harm, bias, data exposure, or unauthorized actions.

4. Governance and Roles

- An AI Review Board (ARB) is established to approve new use cases, risk classification, and exceptions.

- Defined roles: Policy Owner, AI Product Owner, Data Protection Officer, Security Lead, and Legal Representative.

- The ARB maintains a register of approved models, datasets, evaluations, and retired models.

- Continuous auditing is required to monitor compliance, security, and fairness.

5. Approved AI Systems

External AI

- Only tools approved by IT and management may be used (e.g., ChatGPT Enterprise, Microsoft Copilot, GitHub Copilot).

- Vendor due diligence and ongoing monitoring are required, including annual reviews, compliance checks, and breach notifications.

Internal AI

- Company-developed or hosted models (e.g., LLaMA, Mistral, Falcon, proprietary fine-tuned models).

- Access is limited to authenticated users via VPN or Single Sign-On (SSO).

- Updates, retraining, and security patches are managed by IT and the AI governance team.

6. Data Usage Rules

External AI

- Do NOT input PHI, PII, financial records, proprietary data, or source code.

- Permitted uses: brainstorming, summarizing public information, idea generation, coding best-practice queries without exposing sensitive code.

- All third-party data sharing must undergo explicit review and approval.
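
Illustrative sketch (not a policy requirement): teams that want an automated reminder before text is pasted into an External AI tool could screen prompts for obviously restricted content. The patterns, the `RESTRICTED_PATTERNS` name, and the `find_restricted` helper below are hypothetical and cover only a few easily recognized formats. A pattern check of this kind cannot detect most PHI, proprietary data, or source code, so it supplements employee judgment and approved data-loss-prevention tooling rather than replacing them.

```python
import re

# Hypothetical patterns, for illustration only. They catch a few obvious
# formats and will miss most restricted data.
RESTRICTED_PATTERNS = {
    "email address": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "US SSN-like number": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    "private key block": re.compile(r"-----BEGIN [A-Z ]*PRIVATE KEY-----"),
}


def find_restricted(prompt: str) -> list[str]:
    """Return the labels of every restricted pattern found in the prompt."""
    return [
        label
        for label, pattern in RESTRICTED_PATTERNS.items()
        if pattern.search(prompt)
    ]


if __name__ == "__main__":
    hits = find_restricted("Please email jane.doe@example.com the draft.")
    if hits:
        print("Do not submit to External AI. Found:", ", ".join(hits))
```

An empty result means only that none of the listed patterns matched, not that the prompt is safe to submit.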

Internal AI

- May process PHI, PII, financial, and proprietary data under approved governance controls.

- Allowed uses: analytics, automation, internal reporting, and decision support.

- Must not connect to external networks or APIs without explicit approval.

- Prompts and outputs must be logged for auditing and retained for at least 90 days.

- Employees and clients have rights under GDPR and similar standards to request data deletion, rectification, or export.
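
Illustrative sketch (not a policy requirement): the 90-day minimum retention for Internal AI prompt and output logs can be expressed as a simple guard that a log-cleanup job consults before purging a record. The names `MIN_RETENTION` and `may_purge` are hypothetical, and the sketch assumes each log record carries a timezone-aware timestamp. How retention interacts with an individual's deletion request is a governance question this sketch does not attempt to answer.

```python
from datetime import datetime, timedelta, timezone

# Minimum retention period stated in the policy for prompt and output logs.
MIN_RETENTION = timedelta(days=90)


def may_purge(logged_at: datetime, now: datetime) -> bool:
    """Return True only when the record has been kept for at least 90 days."""
    return now - logged_at >= MIN_RETENTION


if __name__ == "__main__":
    logged_at = datetime(2025, 1, 1, tzinfo=timezone.utc)
    print(may_purge(logged_at, datetime.now(timezone.utc)))
```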

7. Use Case Risk Tiering

AI use cases must be classified:

- Low Risk: General brainstorming, public data only.

- Medium Risk: Proprietary internal data, automation with moderate impact.

- High Risk: Any use of PHI, PII, or customer-facing decision systems.

Medium-Risk and High-Risk use cases require ARB approval.
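
Illustrative sketch (not a policy requirement): the tiering rules above can be read as a decision procedure in which the highest applicable tier wins. The `RiskTier` enum, the `classify` function, and its four yes/no inputs are hypothetical names chosen for this example. An actual intake form would likely collect more detail, and the ARB retains the final classification.

```python
from enum import Enum


class RiskTier(Enum):
    LOW = "Low Risk"
    MEDIUM = "Medium Risk"
    HIGH = "High Risk"


def classify(
    uses_phi_or_pii: bool,
    customer_facing_decision: bool,
    uses_proprietary_data: bool,
    moderate_impact_automation: bool,
) -> RiskTier:
    """Map a use case to a tier; the highest applicable tier wins."""
    if uses_phi_or_pii or customer_facing_decision:
        return RiskTier.HIGH
    if uses_proprietary_data or moderate_impact_automation:
        return RiskTier.MEDIUM
    return RiskTier.LOW


def requires_arb_approval(tier: RiskTier) -> bool:
    """Medium-Risk and High-Risk use cases need AI Review Board approval."""
    return tier is not RiskTier.LOW


if __name__ == "__main__":
    tier = classify(False, False, True, False)
    print(tier.value, "- ARB approval required:", requires_arb_approval(tier))
```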

8. Security and Assurance

External AI

- Access restricted to corporate accounts.

- Employees must assume vendor servers may store queries.

- Vendors must undergo annual security audits and notify the company of policy or compliance changes.

Internal AI

- All usage requires authentication (VPN/SSO).

- Usage is logged and monitored.

- Models must undergo Testing, Evaluation, Verification, and Validation (TEVV), including red-teaming against prompt injection.

- Lifecycle management is required: documentation, retraining schedules, and archival of retired models.

- Environmental impact of model training and deployment must be considered and minimized where possible.

9. Content Review, Transparency & Explainability

- All AI-generated content must be reviewed by a human before use.

- Client-facing deliverables require manager or peer review.

- Employees must disclose when AI was used in deliverables if required by law or contract.

- AI-generated media must include labels or watermarks where possible.

- AI systems used for decisions must provide clear, understandable explanations of outcomes when requested.

10. Bias, Ethics, and Responsible Use

- Employees must check outputs for harmful bias or discrimination.

- AI systems must undergo periodic fairness and bias audits.

- AI must not be used for malicious, fraudulent, or deceptive purposes.

- AI must not be used to harass, manipulate, or bypass compliance controls.

10a. AI Voice Usage

Disclosure and Transparency

- All AI-generated voice content must be clearly disclosed as synthetic when used externally.

- Employees may not use AI voice systems to impersonate any individual without explicit written consent.

Consent and Privacy

- Personal voice recordings may only be used to train AI models with prior documented consent.

- Unauthorized capture, synthesis, or replication of an individual’s voice is prohibited.

Acceptable Use

- Permitted: training, accessibility, customer support, marketing (with disclosure), and productivity applications.

- Prohibited: fraud, harassment, deepfakes, misinformation, or any deceptive use.

Security Controls

- AI voice files and training data must be stored only on approved platforms and subject to logging and monitoring.

- Access is restricted to authorized users with a legitimate business need.

10b. AI Graphics Usage

Attribution and Disclosure

- AI-generated images, graphics, or videos must be clearly labeled where reasonably possible.

- AI content must not be presented as authentic or factual without appropriate disclosure.

Intellectual Property and Copyright

- Do not generate graphics that infringe on copyrighted works, trademarks, or brand assets without permission.

- All AI-generated media is company IP unless otherwise stated.

Consent and Representation

- Creation of AI graphics depicting employees, clients, or partners requires explicit written consent.

- Prohibited: generating offensive, discriminatory, defamatory, or harmful images.

Acceptable Use

- Permitted: marketing prototypes, internal design, educational materials, and creative exploration.

- Prohibited: medical/legal graphics, misleading imagery, or any use that risks reputational damage without review/approval.

Security and Integrity

- Only company-approved AI graphics platforms may be used.

- Generated content must be stored in designated repositories with appropriate audit logging.

11. Automated Decision-Making & Appeals

- If an AI system makes a decision affecting employees or customers, individuals have the right to request human review and appeal the outcome.

- Managers must ensure a documented appeals process is in place.

12. AI Incident Response and Reporting

- AI Incidents include data leaks, harmful outputs, unauthorized use, or ethical breaches.

- Employees must report incidents immediately through a dedicated hotline or secure digital form.

- Whistleblower protections apply to employees raising concerns.

- Investigations must begin within 48 hours, and an incident register must be maintained.
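
Illustrative sketch (not a policy requirement): an incident register could track the 48-hour investigation window automatically. The function names below are hypothetical, and the sketch assumes the window starts when the incident is reported, which the policy does not state explicitly.

```python
from datetime import datetime, timedelta, timezone
from typing import Optional

# Window within which an investigation must begin.
# Assumption: the clock starts at the time the incident is reported.
INVESTIGATION_WINDOW = timedelta(hours=48)


def investigation_deadline(reported_at: datetime) -> datetime:
    """Return the latest time at which the investigation may begin."""
    return reported_at + INVESTIGATION_WINDOW


def is_overdue(
    reported_at: datetime,
    now: datetime,
    investigation_started_at: Optional[datetime] = None,
) -> bool:
    """Return True if the investigation started late or has not started in time."""
    deadline = investigation_deadline(reported_at)
    if investigation_started_at is not None:
        return investigation_started_at > deadline
    return now > deadline


if __name__ == "__main__":
    reported = datetime(2025, 3, 1, 9, 0, tzinfo=timezone.utc)
    print("Investigation must begin by:", investigation_deadline(reported).isoformat())
```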

13. Intellectual Property and Ownership

- All outputs from approved AI systems are company IP.

- Copyrighted third-party materials must be used within licensing limits.

- No employee may expose customer proprietary data to external AI systems.

14. Compliance and Regulations

- External AI: Must comply with GDPR, HIPAA, and vendor terms.

- Internal AI: Must comply with NIST AI RMF, ISO 23894, and sector-specific laws.

- EU operations must align with the EU AI Act, including prohibited practices and risk classification.

- Continuous auditing is required to adapt to new laws and risks.

15. Monitoring and Enforcement

- All AI activity may be monitored.

- Violations include use of unapproved AI systems, inputting restricted data into External AI, and unauthorized use or misuse of Internal AI.

- Disciplinary action may include warnings, loss of access, or termination.

16. Training and Continuous Education

- Employees must complete annual Responsible AI training.

- Microlearning modules will be provided when significant new risks, laws, or tools are introduced.

- Quarterly refreshers are required for teams working directly with AI systems.

17. Document Review

- This policy will be reviewed annually, with quarterly reviews for Internal AI governance.

- Continuous monitoring and proactive audits are required to ensure compliance.

- Updates will be communicated promptly to all employees.

18. Employee Acknowledgment

I acknowledge that I have read and understood the Artificial Intelligence Acceptable Use Policy. I agree to comply with all rules regarding External and Internal AI Tools, data handling, governance, and ethical use. I understand that violations may result in disciplinary action, including termination.

External vs Internal AI — Summary

| Category | External AI Tools (Online) | Internal AI Tools (Local/VPN-Based) |
|---|---|---|
| Approval & Access | Approved cloud vendors only; access via corporate accounts; vendor logs possible. | Company-hosted; access restricted to VPN/SSO; logs stored internally. |
| Data Usage | No PHI, PII, financial, source code, or proprietary data allowed. | PHI, PII, financial, and proprietary data may be processed under governance rules. |
| Vendor Management | Vendor due diligence and annual monitoring; vendors must notify of policy changes. | Managed internally by IT/AI governance with full control of updates and patches. |
| Security | Vendor-controlled infrastructure; limited ability to enforce encryption, retention, or audit depth. | Full internal control: TEVV, red-teaming, encryption at rest and in transit, monitoring. |
| Transparency & Explainability | Transparency limited to vendor disclosures; explainability not always guaranteed. | Company responsible for explainability; provide plain-language explanations for critical decisions. |
| Bias & Ethics | Employees must review for bias; vendor audits may be limited. | Mandatory fairness audits, bias checks, and documentation of mitigations. |
| Decision-Making & Appeals | Not to be used for automated high-impact decisions affecting individuals. | If used in HR, finance, or customer service, must provide human review and appeals process. |
| Data Rights | Cannot guarantee GDPR-style deletion/export; vendor retention policies apply. | Must provide rights for deletion, rectification, and export of data (GDPR compliance). |
| Lifecycle Management | Vendors manage their models; limited company influence. | Full lifecycle: design → deployment → monitoring → retraining → archival/decommissioning. |
| Environmental Impact | Outside company control; vendor may or may not consider sustainability. | Teams must document and minimize energy use; prefer efficient training and hosting. |
| Monitoring & Enforcement | Compliance audits based on vendor reports; external oversight required. | Continuous internal monitoring, quarterly audits, proactive governance. |